Hello, and welcome. We’re at a turning point where AI is reshaping today’s world, and quantum computing promises to change tomorrow’s. This memo dives into how these two powerful forces intersect, helping you make sense of the opportunity and uncertainty ahead.

Executive summary

AI infrastructure is entering a sustained, high‑investment decade. Data center GPU and AI chip markets are projected to reach hundreds of billions of dollars by 2030, with some forecasts putting AI chips for data centers and cloud alone above USD 400 billion. These forecasts suggest that AI infrastructure is a long build‑out cycle rather than a short‑lived bubble.

Quantum computing has crossed from hype into verifiable technical advantage, but not yet into broad commercial impact. Google’s Willow chip and the Quantum Echoes algorithm demonstrated a roughly 13,000× speedup over a top supercomputer on a real physics simulation with verifiable outputs, while the total quantum market is still projected at only the low tens of billions of dollars by 2030.

Leaders need a bimodal strategy: scale AI now, experiment with quantum deliberately. The most resilient posture is to keep compounding value from classical AI infrastructure while treating quantum as a structured options portfolio—small, targeted experiments, talent building, and selective partnerships, rather than all‑in bets.

Market map and key players

The computing landscape now has two active frontiers: classical AI infrastructure, where value and spend are already large and visible, and quantum computing, where value is emerging from a much smaller base.

Incumbents in classical AI.

Nvidia dominates the data center GPU market with an estimated majority share and a deep software moat via CUDA and its ecosystem, positioning itself as the default platform for AI training and inference workloads.

Hyperscalers like Amazon, Google, and Microsoft are integrating Nvidia and AMD GPUs with their own accelerators (e.g., TPUs, Trainium) to vertically integrate AI services while trying to avoid over‑dependence on any single vendor.

Disruptors and quantum pioneers.

AMD is pushing hard into AI GPUs with a public strategy to win a significant slice of what it calls a trillion‑dollar compute opportunity, including recent wins that signal buyers’ desire for genuine second sources.

In quantum, Google’s Willow, IBM’s quantum roadmap, European players like IQM, and regionally backed initiatives in countries such as France, Saudi Arabia, and India represent a fast‑growing but fragmented field racing toward scalable hardware and cloud‑accessible quantum services.

Enablers and policy shapers.

Cloud providers act as aggregation layers, offering access to multiple AI accelerators and early‑stage quantum systems through a single platform, abstracting hardware complexity for enterprises.

Governments are committing national strategies and billions in funding for both AI data centers and quantum fabs, seeing compute capability as a strategic asset on par with energy and semiconductors.

Data analysis and benchmarks

Optimism is justified on the AI side because the demand and spending curves are already visible; caution is justified on both AI and quantum because of concentration risk, capex intensity, and uncertainty about which technical paths will win.

Classical AI: big base, strong but not risk‑free growth.

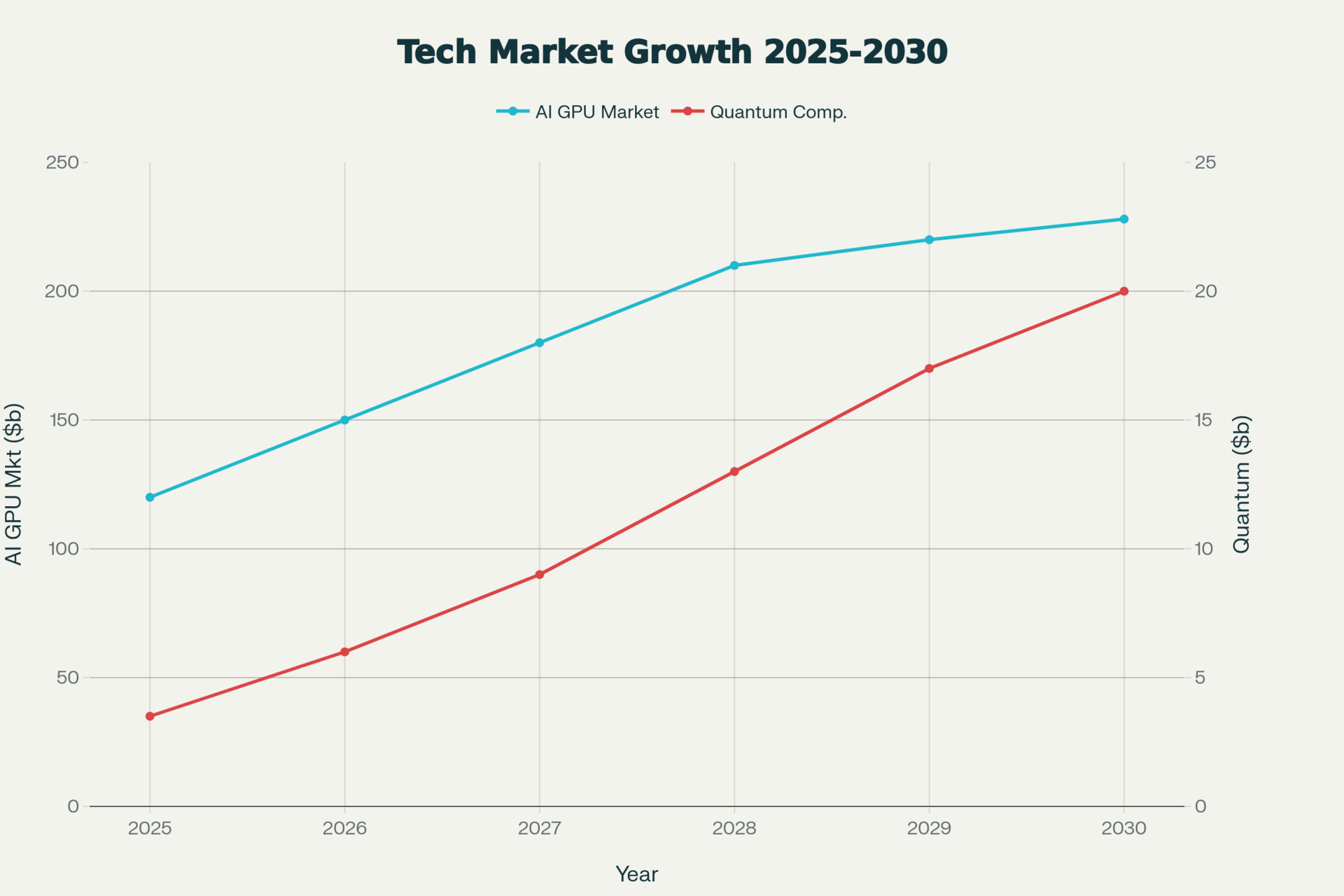

The global data center GPU market is projected to roughly double toward the end of the decade, with estimates putting it above USD 200 billion by 2030 as AI workloads proliferate across industries.

The broader market for data center and cloud AI chips is forecast to exceed USD 400 billion by 2030. That implies sustained double‑digit annual growth and suggests that AI infrastructure is becoming one of the largest capex lines in enterprise IT and hyperscale budgets.

Quantum: small today, fast‑growing, highly uncertain distribution.

Quantum computing market estimates cluster around low tens of billions of dollars by 2030, from a low single‑digit billion baseline today, implying very high compound annual growth but from a tiny base.
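The "high growth from a tiny base" point can be made concrete with the standard compound annual growth rate formula. The sketch below is only illustrative: the start value, end value, and horizon are hypothetical placeholders chosen to match the phrases "low single‑digit billion" and "low tens of billions", not figures taken from any forecast.

```python
"""Illustrative CAGR arithmetic; the dollar figures are placeholders, not forecasts."""


def cagr(start_value: float, end_value: float, years: float) -> float:
    """Return the compound annual growth rate as a fraction (0.10 == 10%)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("start_value, end_value, and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Hypothetical inputs (assumptions, in USD billions): a "low single-digit"
# baseline of 2 growing to a "low tens" figure of 20 over 5 years.
ASSUMED_START_BN = 2.0
ASSUMED_END_BN = 20.0
ASSUMED_YEARS = 5

if __name__ == "__main__":
    rate = cagr(ASSUMED_START_BN, ASSUMED_END_BN, ASSUMED_YEARS)
    added = ASSUMED_END_BN - ASSUMED_START_BN
    print(f"Implied CAGR: {rate:.1%}")
    print(f"Absolute growth: USD {added:.0f} billion")
```

Under these placeholder inputs the growth rate comes out near 58% a year, yet the absolute increase is only USD 18 billion, which is small next to the USD 400 billion forecast cited above for AI chips. Swapping in your own preferred estimates changes the numbers but not the shape of the comparison.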

Google’s Willow chip, running the Quantum Echoes algorithm, completed a task (an out‑of‑time‑ordered correlator simulation) in hours that would take a leading classical supercomputer years. Crucially, the results can be independently verified, marking a meaningful step beyond earlier, contested “supremacy” claims.
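As a rough sanity check, the "hours versus years" framing is consistent with the roughly 13,000× speedup quoted in the executive summary. The sketch below converts a quantum runtime into the equivalent classical runtime. The 2‑hour quantum runtime is an assumption chosen only to represent "hours"; the memo does not state the exact figure.

```python
"""Convert a quantum runtime and a speedup factor into classical runtime in years."""

HOURS_PER_YEAR = 365.25 * 24  # average year length: 8766 hours


def classical_runtime_years(quantum_hours: float, speedup: float) -> float:
    """Classical runtime in years implied by a quantum runtime and a speedup."""
    if quantum_hours <= 0 or speedup <= 0:
        raise ValueError("quantum_hours and speedup must be positive")
    return quantum_hours * speedup / HOURS_PER_YEAR


ASSUMED_QUANTUM_HOURS = 2.0  # assumption: the memo only says "hours"
CITED_SPEEDUP = 13_000       # the "roughly 13,000x" figure from the memo

if __name__ == "__main__":
    years = classical_runtime_years(ASSUMED_QUANTUM_HOURS, CITED_SPEEDUP)
    print(f"Implied classical runtime: {years:.1f} years")
```

With a 2‑hour assumption the implied classical runtime is about three years, so the two descriptions of the result agree in order of magnitude.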

Enterprise AI adoption and the risk of undifferentiated use.

Enterprise surveys show that a large majority of organizations, often 70–80% or more, now report some form of AI or generative AI in production, with adoption concentrated in customer service, analytics, and software development assistance.

This broad adoption means that simply “using AI” no longer differentiates; the differentiator shifts toward proprietary data, tightly integrated workflows, and the ability to manage AI and, eventually, quantum as part of a coherent compute portfolio.

Decision checklist and strategic scenarios

An optimistic but cautious leader does not try to predict exactly which chip or quantum architecture will win; instead, they design a portfolio and process that can adapt as evidence arrives.

Decision checklist for the next 12–24 months

1. Infrastructure concentration risk.

How exposed are critical workloads to a single AI accelerator vendor or cloud region, and what is the plan to introduce at least one alternative path (e.g., second GPU vendor, second cloud, or on‑prem option) without exploding complexity?

2. Workload–hardware fit.

Have you matched workload classes (training vs. inference; latency‑sensitive vs. batch; tabular vs. multimodal) to appropriate hardware tiers, rather than defaulting all investment to the most expensive flagship GPUs?

3. Quantum relevance mapping.

Which of your top R&D or analytic problems are inherently about optimization, complex simulation, or cryptography, the areas where quantum techniques are most likely to offer an eventual edge?

4. Talent and learning pipeline.

Is there at least a small, cross‑functional group (engineering, data science, security, strategy) explicitly tasked with tracking quantum and advanced AI hardware, running small experiments, and reporting implications in business terms?

5. Pilot portfolio design.

Do you have 1–3 low‑risk pilots scoped with cloud‑hosted quantum or hybrid services, each with clear success metrics, a small budget, and a defined “kill or scale” decision point, so that your organization learns before adoption becomes urgent?

6. Governance and resilience.

Are procurement, security, and compliance teams involved early enough that new AI and quantum services do not create hidden regulatory, data residency, or resilience issues, especially as data centers cluster in specific regions with energy and water constraints?

Strategic scenarios and stance

Scenario 1: Extended AI hegemony (baseline with downside risks).

AI infrastructure keeps compounding, Nvidia and a small set of competitors remain dominant, and quantum delivers only narrow, specialized wins by 2030; the main risks are margin pressure from rising compute costs and over‑reliance on a few suppliers.

Scenario 2: Hybrid classical–quantum stack (most likely optimistic‑cautious case).

Cloud providers make it easy to call quantum resources as specialized co‑processors alongside GPUs and CPUs; early production use appears first in sectors like finance, materials, and energy for specific optimization and simulation workloads, without displacing classical AI but complementing it.

Scenario 3: Disruptive quantum breakthrough (low‑probability, high‑impact).

A major advance in error correction or qubit technology yields broadly useful, fault‑tolerant machines earlier than expected, creating a sharp advantage for organizations that already have data, talent, and partnerships aligned to exploit them.

The recommended stance is to optimize for Scenario 2 while staying robust to Scenario 1 and keeping optional exposure to the upside of Scenario 3.

That means doubling down on economically disciplined AI infrastructure today—diversified vendors, workload‑appropriate hardware, and measurable ROI—while reserving a small, explicitly managed slice of budget and attention for quantum pilots, talent development, and ecosystem relationships so that your organization is ready if and when quantum shifts from promising to pivotal.