What Changed?

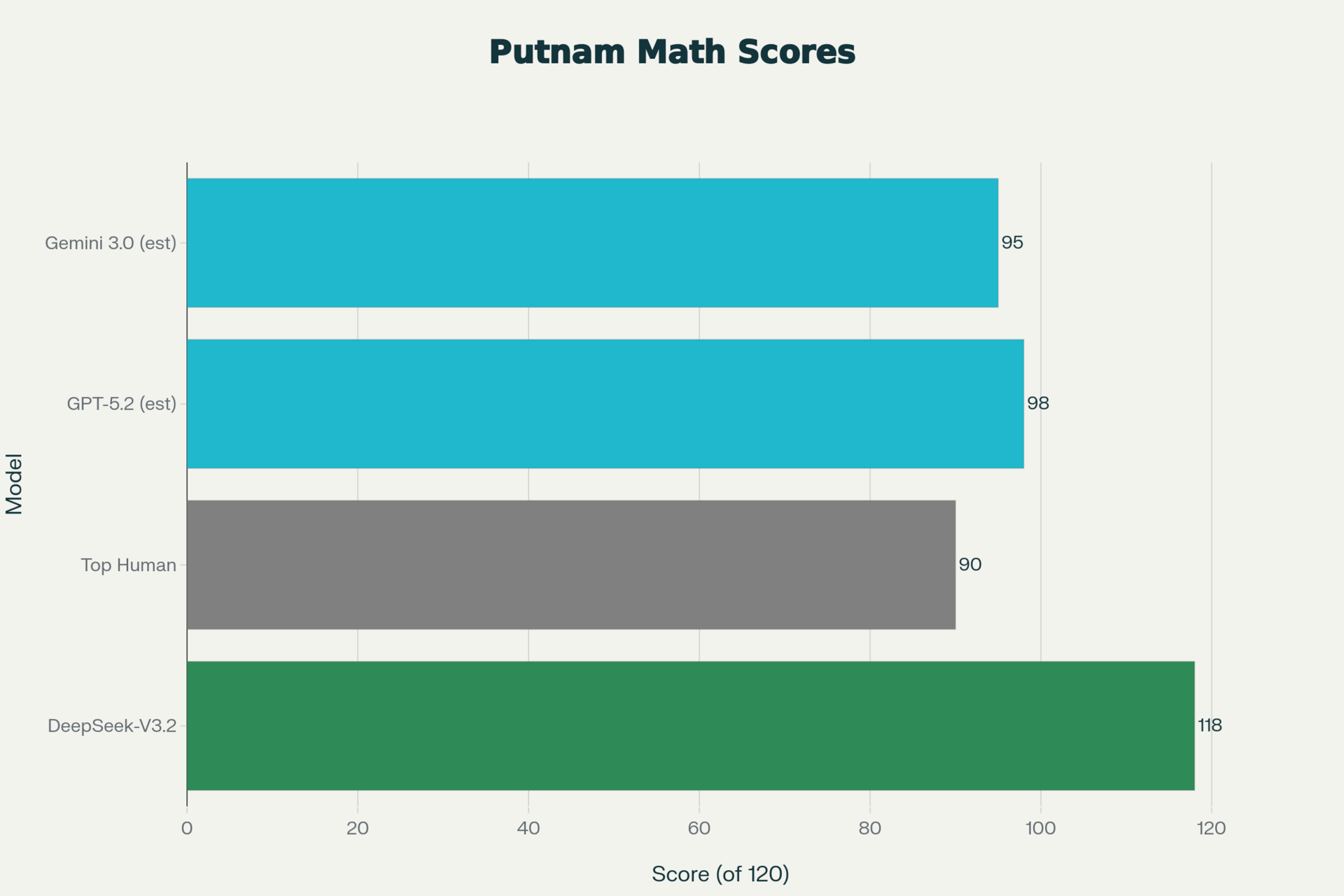

DeepSeek-V3.2 crushes the Putnam Math Competition: 118/120 vs. a top human score of 90

DeepSeek released its V3.2 and V3.2-Speciale models, which scored 118/120 on the William Lowell Putnam Mathematical Competition, surpassing the top human score of 90 on the same exam. This Chinese open-source lab built a production-ready, self-verifiable reasoning system that combines proof generators, mathematical verifiers, and meta-verification layers, so complex multi-step reasoning can be both autonomous and checkable. The models are available immediately via API at pricing that undercuts U.S. frontier models by 3-5x.

So What for Decision-Makers?

Non-Western AI leadership is now real. DeepSeek proves open-source models from China can match (and beat) U.S. frontier capabilities on reasoning benchmarks while maintaining dramatic cost advantages. Enterprises no longer need to bet exclusively on OpenAI/Anthropic/Google for cutting-edge reasoning.

Mathematical reasoning unlocks high-value enterprise workflows. This isn't abstract math: it applies directly to financial risk modeling (Monte Carlo simulations, derivatives pricing), supply chain optimization (multi-constraint integer programming), engineering design (structural analysis, tolerance stack-up), and R&D acceleration (molecular dynamics, materials simulation). Where your teams currently spend weeks on complex modeling, autonomous reasoning could deliver verified results in hours.

Agentic AI strategy must prioritize verifiable reasoning over coding fluency. Current enterprise LLMs excel at code generation but often struggle with multi-step logical validation. DeepSeek's proof system points to a new category: decision agents that don't just suggest solutions but prove them correct, with the potential to reduce human oversight from 100% of outputs to 10%.
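To make "prove them correct" concrete, here is a minimal Python sketch of an independent check you could run on any model-proposed plan. It is not DeepSeek's proof system; it is a hypothetical feasibility checker for a toy shipping problem, and the names `verify_plan`, `capacity`, and `demand` are illustrative assumptions. It confirms that a plan respects the constraints, not that the plan is optimal.

```python
from typing import Dict, List, Tuple

# (warehouse, store) -> units shipped. Hypothetical data shape for illustration.
Shipments = Dict[Tuple[str, str], int]


def verify_plan(shipments: Shipments,
                capacity: Dict[str, int],
                demand: Dict[str, int]) -> List[str]:
    """Return the violated constraints; an empty list means the plan is feasible."""
    violations = []
    # Integer programming constraint: whole, non-negative units only.
    for (warehouse, store), units in shipments.items():
        if not isinstance(units, int) or units < 0:
            violations.append(
                f"{warehouse}->{store}: units must be a non-negative integer")
    # No warehouse may ship more than its capacity.
    for warehouse, limit in capacity.items():
        shipped = sum(u for (w, _), u in shipments.items() if w == warehouse)
        if shipped > limit:
            violations.append(f"{warehouse}: ships {shipped}, capacity {limit}")
    # Every store must receive at least its demand.
    for store, needed in demand.items():
        received = sum(u for (_, s), u in shipments.items() if s == store)
        if received < needed:
            violations.append(f"{store}: receives {received}, needs {needed}")
    return violations


capacity = {"W1": 100, "W2": 80}
demand = {"S1": 90, "S2": 70}
# A plausible-looking plan that quietly overloads W1.
proposed = {("W1", "S1"): 90, ("W1", "S2"): 20, ("W2", "S2"): 50}
print(verify_plan(proposed, capacity, demand))
# ['W1: ships 110, capacity 100']
```

The point of the design: checking a proposed answer is usually far cheaper than producing it, so the checker can run on every output while humans review only the failures.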

Try This Now: Deploy Reasoning Pilot in One Critical Workflow

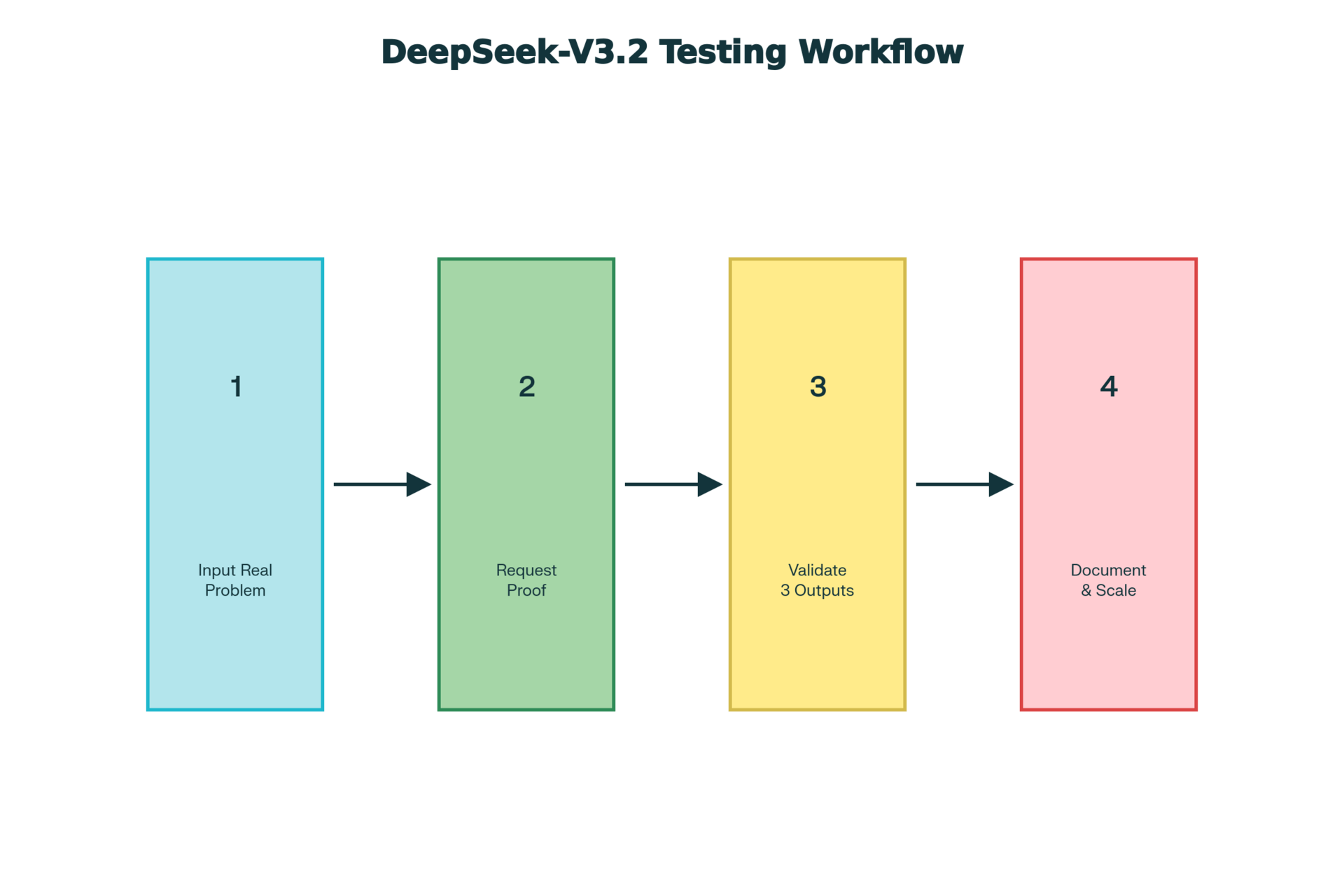

4-Step DeepSeek Reasoning Testing Workflow

Select your highest-ROI analytical bottleneck (financial forecasting, inventory optimization, structural engineering, pharma trial design) and test DeepSeek-V3.2 for 20 minutes:

1. Input your actual problem with constraints and objectives (don't sanitize; use real data)
2. Request step-by-step proof of the solution, not just the answer
3. Validate 3 outputs against your team's known-good results or simulation tools
4. Document pass/fail rate and assign one engineer to build a proof-verified agent wrapper by Friday
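The four steps above can be sketched as a small Python harness. Treat it as a starting point under stated assumptions: `PilotCase`, `build_request`, `extract_answer`, and `score` are hypothetical helpers, the payload follows the common chat-completions message format, and the model name is a placeholder you should confirm against the provider's documentation. The harness does not call any API; you paste in or plug in the responses yourself. It also compares only the final number, so the reasoning steps still need a human read.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class PilotCase:
    name: str
    prompt: str            # the real problem, with constraints and objectives
    expected: float        # known-good result from your team or simulator
    rel_tol: float = 0.01  # accept answers within 1% of the known-good value


def build_request(case: PilotCase, model: str = "deepseek-reasoner") -> dict:
    """Build a chat-style payload that asks for the proof, not just the answer."""
    instructions = (
        "Solve the problem. Show and justify every step. "
        "Finish with a final line of the form 'ANSWER: <number>'."
    )
    return {
        "model": model,  # placeholder name; an assumption, not from the source
        "messages": [
            {"role": "system", "content": instructions},
            {"role": "user", "content": case.prompt},
        ],
    }


def extract_answer(response_text: str) -> Optional[float]:
    """Read the last 'ANSWER:' line; return None if it is missing or malformed."""
    for line in reversed(response_text.strip().splitlines()):
        if line.strip().upper().startswith("ANSWER:"):
            try:
                return float(line.split(":", 1)[1].strip().replace(",", ""))
            except ValueError:
                return None
    return None


def score(cases: List[PilotCase], responses: Dict[str, str]) -> Tuple[int, int]:
    """Return (passed, total) by comparing answers to the known-good results."""
    passed = 0
    for case in cases:
        answer = extract_answer(responses.get(case.name, ""))
        if answer is not None and math.isclose(
                answer, case.expected, rel_tol=case.rel_tol):
            passed += 1
    return passed, len(cases)
```

Recording `score(...)` for your three cases gives you the pass/fail number for step 4, in a form the assigned engineer can rerun as the wrapper takes shape.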

DeepSeek API Playbook: DeepSeek-V3.2 Reasoning Guide (includes prompt templates for optimization, simulation, financial modeling)

Time estimate: 20 minutes testing + 10 minutes documentation

Expected outcome: Quantified evidence of reasoning capability gaps in your current stack, plus a first proof of concept for autonomous decision agents that could save 20-50% of analyst time on complex modeling

Pro Tip: If more than 80% of your test cases pass verification, immediately allocate $5K/month API budget and one FTE to productionize. The reasoning gap between DeepSeek and incumbents will close fast, so move now or pay later.
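One practical note on that 80% bar: with only a handful of test cases, "more than 80%" is a stricter rule than it sounds. The short Python sketch below works through the arithmetic. The helper names are hypothetical, and the 80% default simply mirrors the threshold above.

```python
def clears_threshold(passed: int, total: int, threshold_pct: int = 80) -> bool:
    """True when the pass rate is strictly above the threshold."""
    if total <= 0:
        raise ValueError("total must be positive")
    # Integer comparison avoids floating-point rounding at the boundary.
    return passed * 100 > threshold_pct * total


def min_passes(total: int, threshold_pct: int = 80) -> int:
    """Smallest number of passing cases that clears the threshold."""
    return next(p for p in range(total + 1)
                if clears_threshold(p, total, threshold_pct))


for total in (3, 5, 10):
    print(f"{total} cases: need {min_passes(total)} passes")
# 3 cases: need 3 passes
# 5 cases: need 5 passes
# 10 cases: need 9 passes
```

With the three outputs from the 20-minute test, only a clean sweep clears the bar, so consider running ten or more cases before committing budget.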

Until next week,

The Yellow Wave